(+30) 210772-2420, 2964

- filby@central.ntua.gr

- Office 2.2.19

Biosketch

I am a postdoctoral researcher at the National Technical University of Athens, supervised by Prof. Petros Maragos. I work at the crossroads of computer vision and audio processing for affective computing. My recent work includes body emotion recognition, visual emotion translation, and 3D visual speech-aware facial expression reconstruction.

Recent Research Projects

Publications

2019

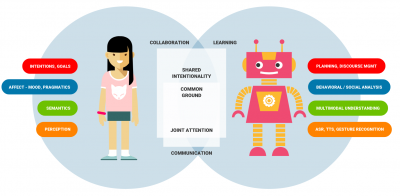

P P Filntisis, N Efthymiou, P Koutras, G Potamianos, P Maragos. Fusing Body Posture With Facial Expressions for Joint Recognition of Affect in Child–Robot Interaction. Journal Article. IEEE Robotics and Automation Letters (with IROS option), 4 (4), pp. 4011-4018, 2019.

Links: PDF: http://robotics.ntua.gr/wp-content/uploads/sites/2/RAL_2019-5.pdf; DOI: 10.1109/LRA.2019.2930434

Abstract: In this letter, we address the problem of multi-cue affect recognition in challenging scenarios such as child–robot interaction. Toward this goal we propose a method for automatic recognition of affect that leverages body expressions alongside facial ones, as opposed to traditional methods that typically focus only on the latter. Our deep-learning based method uses hierarchical multi-label annotations and multi-stage losses, can be trained both jointly and separately, and offers us computational models for both individual modalities, as well as for the whole body emotion. We evaluate our method on a challenging child–robot interaction database of emotional expressions collected by us, as well as on the GEneva multimodal emotion portrayal public database of acted emotions by adults, and show that the proposed method achieves significantly better results than facial-only expression baselines.

2018

N. Efthymiou, P. Koutras, P. P. Filntisis, G. Potamianos, P. Maragos. Multi-View Fusion for Action Recognition in Child-Robot Interaction. Conference. Proc. IEEE Int'l Conf. on Image Processing, Athens, Greece, 2018.

Links: PDF: http://robotics.ntua.gr/wp-content/uploads/sites/2/EfthymiouKoutrasFilntisis_MultiViewFusActRecognChildRobotInteract_ICIP18.pdf

Abstract: Answering the challenge of leveraging computer vision methods in order to enhance Human Robot Interaction (HRI) experience, this work explores methods that can expand the capabilities of an action recognition system in such tasks. A multi-view action recognition system is proposed for integration in HRI scenarios with special users, such as children, in which there is limited data for training and many state-of-the-art techniques face difficulties. Different feature extraction approaches, encoding methods and fusion techniques are combined and tested in order to create an efficient system that recognizes children pantomime actions. This effort culminates in the integration of a robotic platform and is evaluated under an alluring Children Robot Interaction scenario.

A Tsiami, P Koutras, Niki Efthymiou, P P Filntisis, G Potamianos, P Maragos. Multi3: Multi-sensory Perception System for Multi-modal Child Interaction with Multiple Robots. Conference. IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 2018.

Links: PDF: http://robotics.ntua.gr/wp-content/publications/2018_TsiamiEtAl_Multi3-MultisensorMultimodalChildInteractMultRobots_ICRA.pdf

Abstract: Child-robot interaction is an interdisciplinary research area that has been attracting growing interest, primarily focusing on edutainment applications. A crucial factor to the successful deployment and wide adoption of such applications remains the robust perception of the child's multimodal actions, when interacting with the robot in a natural and untethered fashion. Since robotic sensory and perception capabilities are platform-dependent and most often rather limited, we propose a multiple Kinect-based system to perceive the child-robot interaction scene that is robot-independent and suitable for indoors interaction scenarios. The audio-visual input from the Kinect sensors is fed into speech, gesture, and action recognition modules, appropriately developed in this paper to address the challenging nature of child-robot interaction. For this purpose, data from multiple children are collected and used for module training or adaptation. Further, information from the multiple sensors is fused to enhance module performance. The perception system is integrated in a modular multi-robot architecture demonstrating its flexibility and scalability with different robotic platforms. The whole system, called Multi3, is evaluated, both objectively at the module level and subjectively in its entirety, under appropriate child-robot interaction scenarios containing several carefully designed games between children and robots.

A Tsiami, P P Filntisis, N Efthymiou, P Koutras, G Potamianos, P Maragos. Far-Field Audio-Visual Scene Perception of Multi-Party Human-Robot Interaction for Children and Adults. Conference. Proc. IEEE Int'l Conf. Acous., Speech, and Signal Processing (ICASSP), Calgary, Canada, 2018.

Links: PDF: http://robotics.ntua.gr/wp-content/publications/2018_TsiamiEtAl_FarfieldAVperceptionHRI-ChildrenAdults_ICASSP.pdf

Abstract: Human-robot interaction (HRI) is a research area of growing interest with a multitude of applications for both children and adult user groups, as, for example, in edutainment and social robotics. Crucial, however, to its wider adoption remains the robust perception of HRI scenes in natural, untethered, and multi-party interaction scenarios, across user groups. Towards this goal, we investigate three focal HRI perception modules operating on data from multiple audio-visual sensors that observe the HRI scene from the far-field, thus bypassing limitations and platform-dependency of contemporary robotic sensing. In particular, the developed modules fuse intra- and/or inter-modality data streams to perform: (i) audio-visual speaker localization; (ii) distant speech recognition; and (iii) visual recognition of hand-gestures. Emphasis is also placed on ensuring high speech and gesture recognition rates for both children and adults. Development and objective evaluation of the three modules is conducted on a corpus of both user groups, collected by our far-field multi-sensory setup, for an interaction scenario of a question-answering "guess-the-object" collaborative HRI game with a "Furhat" robot. In addition, evaluation of the game incorporating the three developed modules is reported. Our results demonstrate robust far-field audio-visual perception of the multi-party HRI scene.

2017

Panagiotis Paraskevas Filntisis, Athanasios Katsamanis, Pirros Tsiakoulis, Petros Maragos. Video-realistic expressive audio-visual speech synthesis for the Greek language. Journal Article. Speech Communication, 95, pp. 137–152, 2017, ISSN: 0167-6393.

Links: PDF: http://robotics.ntua.gr/wp-content/uploads/publications/FilntisisKatsamanisTsiakoulis+_VideoRealExprAudioVisSpeechSynthGrLang_SC17.pdf; DOI: 10.1016/j.specom.2017.08.011

Abstract: High quality expressive speech synthesis has been a long-standing goal towards natural human-computer interaction. Generating a talking head which is both realistic and expressive appears to be a considerable challenge, due to both the high complexity in the acoustic and visual streams and the large non-discrete number of emotional states we would like the talking head to be able to express. In order to cover all the desired emotions, a significant amount of data is required, which poses an additional time-consuming data collection challenge. In this paper we attempt to address the aforementioned problems in an audio-visual context. Towards this goal, we propose two deep neural network (DNN) architectures for Video-realistic Expressive Audio-Visual Text-To-Speech synthesis (EAVTTS) and evaluate them by comparing them directly both to traditional hidden Markov model (HMM) based EAVTTS, as well as a concatenative unit selection EAVTTS approach, both on the realism and the expressiveness of the generated talking head. Next, we investigate adaptation and interpolation techniques to address the problem of covering the large emotional space. We use HMM interpolation in order to generate different levels of intensity for an emotion, as well as investigate whether it is possible to generate speech with intermediate speaking styles between two emotions. In addition, we employ HMM adaptation to adapt an HMM-based system to another emotion using only a limited amount of adaptation data from the target emotion. We performed an extensive experimental evaluation on a medium sized audio-visual corpus covering three emotions, namely anger, sadness and happiness, as well as neutral reading style. Our results show that DNN-based models outperform HMMs and unit selection on both the realism and expressiveness of the generated talking heads, while in terms of adaptation we can successfully adapt an audio-visual HMM set trained on a neutral speaking style database to a target emotion. Finally, we show that HMM interpolation can indeed generate different levels of intensity for EAVTTS by interpolating an emotion with the neutral reading style, as well as in some cases, generate audio-visual speech with intermediate expressions between two emotions.