- (+30) 210-772-1528

- nkardaris@mail.ntua.gr

- Office 2.1.12

Biosketch

I was born in Athens in 1990. I received my Diploma degree in Electrical and Computer Engineering from the National Technical University of Athens (NTUA). My diploma thesis was on automatic action and gesture recognition with applications to human-robot interaction.

Since October 2017 I have been a PhD student in the CVSP Group, School of ECE, NTUA, under the supervision of Prof. Petros Maragos, working in the general area of computer vision and visual understanding. My research interests lie primarily in activity recognition, deep learning and human-robot interaction.

Publications

2017

A Zlatintsi, I Rodomagoulakis, V Pitsikalis, P Koutras, N Kardaris, X Papageorgiou, C Tzafestas, P Maragos. Social Human-Robot Interaction for the Elderly: Two Real-life Use Cases. ACM/IEEE International Conference on Human-Robot Interaction (HRI), Vienna, Austria, 2017. PDF: http://robotics.ntua.gr/wp-content/publications/Zlatintsi+_SocialHRIforTheElderly_HRI-17.pdf

Abstract: We explore new aspects of assistive living via smart social human-robot interaction (HRI) involving automatic recognition of multimodal gestures and speech in a natural interface, providing social features in HRI. We discuss a whole framework of resources, including datasets and tools, briefly shown in two real-life use cases for elderly subjects: a multimodal interface of an assistive robotic rollator and an assistive bathing robot. We discuss these domain-specific tasks and the open-source tools that can be used to build such HRI systems, as well as indicative results. Sharing such resources can open new perspectives in assistive HRI.

2016

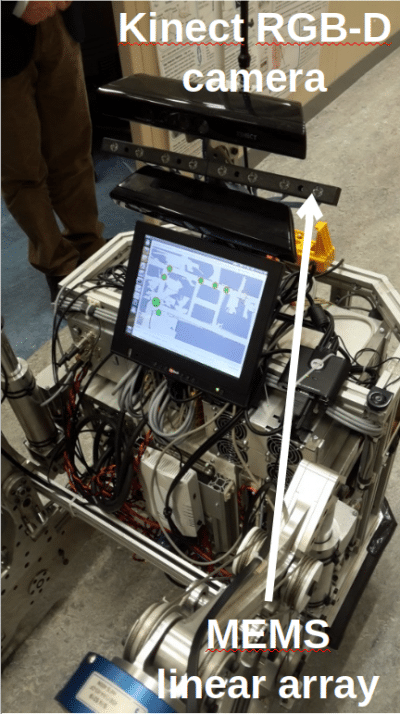

N Kardaris, I Rodomagoulakis, V Pitsikalis, A Arvanitakis, P Maragos. A Platform for Building New Human-Computer Interface Systems that Support Online Automatic Recognition of Audio-Gestural Commands. Proceedings of the 2016 ACM on Multimedia Conference, Amsterdam, The Netherlands, 2016. DOI: 10.1145/2964284.2973794. PDF: http://robotics.ntua.gr/wp-content/publications/KRPAM_BuildingMultimodalInterfaces_ACM-MM2016.pdf

Abstract: We introduce a new framework to build human-computer interfaces that provide online automatic audio-gestural command recognition. The overall system allows the construction of a multimodal interface that recognizes user input expressed naturally as audio commands and manual gestures, captured by sensors such as Kinect. It includes a component for acquiring multimodal user data, which is used as input to a module responsible for training audio-gestural models. These models are employed by the automatic recognition component, which supports online recognition of audiovisual modalities. The overall framework is exemplified by a working system use case. This demonstrates the potential of the overall software platform, which can be employed to build other new human-computer interaction systems. Moreover, users may populate libraries of models and/or data that can be shared in the network. In this way users may reuse or extend existing systems.

A Guler, N Kardaris, S Chandra, V Pitsikalis, C Werner, K Hauer, C Tzafestas, P Maragos, I Kokkinos. Human Joint Angle Estimation and Gesture Recognition for Assistive Robotic Vision. Proc. of Workshop on Assistive Computer Vision and Robotics, European Conf. on Computer Vision (ECCV-2016), Amsterdam, The Netherlands, 2016. DOI: 10.1007/978-3-319-48881-3_29. PDF: http://robotics.ntua.gr/wp-content/publications/PoseEstimGestureRecogn-AssistRobotVision_ACVR2016-ECCV-Workshop.pdf

Abstract: We explore new directions for automatic human gesture recognition and human joint angle estimation as applied to human-robot interaction in the context of an actual challenging task of assistive living for real-life elderly subjects. Our contributions include state-of-the-art approaches for both low- and mid-level vision, as well as for higher-level action and gesture recognition. The first direction investigates a deep-learning-based framework for the challenging task of human joint angle estimation on noisy real-world RGB-D images. The second direction includes the employment of dense trajectory features for online processing of videos for automatic gesture recognition with real-time performance. Our approaches are evaluated both qualitatively and quantitatively on a newly acquired dataset that is constructed on a challenging real-life scenario on assistive living for elderly subjects.

N Kardaris, V Pitsikalis, E Mavroudi, P Maragos. Introducing Temporal Order of Dominant Visual Word Sub-Sequences for Human Action Recognition. Proc. of IEEE Int'l Conf. on Image Processing (ICIP-2016), Phoenix, AZ, USA, 2016. DOI: 10.1109/ICIP.2016.7532922. PDF: http://robotics.ntua.gr/wp-content/publications/KPMM_TemporalOrderForVisualWords-ActionRecognition_ICIP2016.pdf

Abstract: We present a novel video representation for human action recognition by considering temporal sequences of visual words. Based on state-of-the-art dense trajectories, we introduce temporal bundles of dominant, that is most frequent, visual words. These are employed to construct a complementary action representation of ordered dominant visual word sequences that additionally incorporates fine-grained temporal information. We exploit the introduced temporal information by applying local sub-sequence alignment that quantifies the similarity between sequences. This facilitates the fusion of our representation with the bag-of-visual-words (BoVW) representation. Our approach incorporates sequential temporal structure and results in a low-dimensional representation compared to the BoVW, while still yielding a decent result when combined with it. Experiments on the KTH, Hollywood2 and the challenging HMDB51 datasets show that the proposed framework is complementary to the BoVW representation, which discards temporal order.

I. Rodomagoulakis, N. Kardaris, V. Pitsikalis, A. Arvanitakis, P. Maragos. A multimedia gesture dataset for human robot communication: Acquisition, tools and recognition results. Proceedings - International Conference on Image Processing, ICIP, vol. 2016-August, pp. 3066-3070, 2016. ISSN: 15224880. DOI: 10.1109/ICIP.2016.7532923. PDF: http://robotics.ntua.gr/wp-content/uploads/publications/RKPAM_MultimedaGestureDataset-HRI_ICIP2016.pdf

Abstract: Motivated by the recent advances in human-robot interaction, we present a new dataset, a suite of tools to handle it, and state-of-the-art work on the recognition of visual gestures and audio commands. The dataset has been collected with an integrated annotation and acquisition web-interface that facilitates on-the-way temporal ground-truths for fast acquisition. The dataset includes gesture instances in which the subjects are not in strict setup positions, and contains multiple scenarios, not restricted to a single static configuration. We accompany it with a valuable suite of tools: a practical interface to acquire audio-visual data in the robotic operating system, a state-of-the-art learning pipeline to train visual gesture and audio command models, and an online gesture recognition system. Finally, we include a rich evaluation of the dataset, providing insightful experimental recognition results.

2015

X S Papageorgiou, G Moustris, V Pitsikalis, G Chalvatzaki, A Dometios, N Kardaris, C S Tzafestas, P Maragos. User-Oriented Cognitive Interaction and Control for an Intelligent Robotic Walker. 7th International Conference on Social Robotics (ICSR 2015), 2015. PDF: http://robotics.ntua.gr/wp-content/publications/ICSR2015_2.pdf

Abstract: Mobility impairments are prevalent in the elderly population and constitute one of the main causes of difficulties in performing Activities of Daily Living (ADLs) and the consequent reduction of quality of life. This paper reports current research work related to the control of an intelligent robotic rollator aiming to provide user-adaptive and context-aware walking assistance. To achieve such targets, a large spectrum of multimodal sensory processing and interactive control modules needs to be developed and seamlessly integrated. These modules should, on the one hand, track and analyse human motions and actions in order to detect pathological situations and estimate user needs, while at the same time predicting the user's (short-term or long-range) intentions in order to adapt robot control actions and supportive behaviours accordingly. User-oriented human-robot interaction and control refers to the functionalities that couple the motions, the actions and, in more general terms, the behaviours of the assistive robotic device to the user in a non-physical interaction context.

P Maragos, V Pitsikalis, A Katsamanis, N Kardaris, E Mavroudi, I Rodomagoulakis, A Tsiami. Multimodal Sensory Processing for Human Action Recognition in Mobility Assistive Robotics. Proc. IROS-2015 Workshop on Cognitive Mobility Assistance Robots, Hamburg, Germany, Sep. 2015. PDF: MaragosEtAl_MultiSensoryHumanActionRecogn-Robotics_IROS2015-Workshop.pdf