Biosketch

Petros Koutras was born in Athens in 1987. He received the Diploma degree in Electrical and Computer Engineering from the National Technical University of Athens (NTUA) in July 2012. His diploma thesis, supervised by Prof. Petros Maragos, addressed eye gaze estimation and emotion classification based on Active Appearance Models, using geometrical and pattern recognition techniques.

Petros is currently a PhD student in the CVSP Group, School of ECE, NTUA, under the supervision of Prof. Petros Maragos, working in the general area of Computer Vision and its applications. His research interests lie primarily in the fields of Face Modeling, Visual Saliency and Event Detection.

Publications

2019

P P Filntisis, N Efthymiou, P Koutras, G Potamianos, P Maragos. Fusing Body Posture With Facial Expressions for Joint Recognition of Affect in Child–Robot Interaction. Journal article, IEEE Robotics and Automation Letters (with IROS option), 4 (4), pp. 4011-4018, 2019. DOI: 10.1109/LRA.2019.2930434. PDF: http://robotics.ntua.gr/wp-content/uploads/sites/2/RAL_2019-5.pdf

Abstract: In this letter, we address the problem of multi-cue affect recognition in challenging scenarios such as child–robot interaction. Toward this goal we propose a method for automatic recognition of affect that leverages body expressions alongside facial ones, as opposed to traditional methods that typically focus only on the latter. Our deep-learning based method uses hierarchical multi-label annotations and multi-stage losses, can be trained both jointly and separately, and offers us computational models for both individual modalities, as well as for the whole body emotion. We evaluate our method on a challenging child–robot interaction database of emotional expressions collected by us, as well as on the GEneva multimodal emotion portrayal public database of acted emotions by adults, and show that the proposed method achieves significantly better results than facial-only expression baselines.

Petros Koutras, Petros Maragos. SUSiNet: See, Understand and Summarize it. Conference, Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2019. PDF: http://robotics.ntua.gr/wp-content/uploads/sites/2/Koutras_SUSiNet_See_Understand_and_Summarize_It_CVPRW_2019_paper.pdf

2018

N. Efthymiou, P. Koutras, P. P. Filntisis, G. Potamianos, P. Maragos. Multi-View Fusion for Action Recognition in Child-Robot Interaction. Conference, Proc. IEEE Int'l Conf. on Image Processing, Athens, Greece, 2018. PDF: http://robotics.ntua.gr/wp-content/uploads/sites/2/EfthymiouKoutrasFilntisis_MultiViewFusActRecognChildRobotInteract_ICIP18.pdf

Abstract: Answering the challenge of leveraging computer vision methods in order to enhance Human Robot Interaction (HRI) experience, this work explores methods that can expand the capabilities of an action recognition system in such tasks. A multi-view action recognition system is proposed for integration in HRI scenarios with special users, such as children, in which there is limited data for training and many state-of-the-art techniques face difficulties. Different feature extraction approaches, encoding methods and fusion techniques are combined and tested in order to create an efficient system that recognizes children pantomime actions. This effort culminates in the integration of a robotic platform and is evaluated under an alluring Children Robot Interaction scenario.

G Bouritsas, P Koutras, A Zlatintsi, Petros Maragos. Multimodal Visual Concept Learning with Weakly Supervised Techniques. Conference, Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, Utah, USA, 2018. PDF: http://robotics.ntua.gr/wp-content/uploads/sites/2/2018_BKZM_MultimodalVisualConceptLearningWeaklySupervisedTechniques_CVPR.pdf

Abstract: Despite the availability of a huge amount of video data accompanied by descriptive texts, it is not always easy to exploit the information contained in natural language in order to automatically recognize video concepts. Towards this goal, in this paper we use textual cues as means of supervision, introducing two weakly supervised techniques that extend the Multiple Instance Learning (MIL) framework: the Fuzzy Sets Multiple Instance Learning (FSMIL) and the Probabilistic Labels Multiple Instance Learning (PLMIL). The former encodes the spatio-temporal imprecision of the linguistic descriptions with Fuzzy Sets, while the latter models different interpretations of each description’s semantics with Probabilistic Labels, both formulated through a convex optimization algorithm. In addition, we provide a novel technique to extract weak labels in the presence of complex semantics, that consists of semantic similarity computations. We evaluate our methods on two distinct problems, namely face and action recognition, in the challenging and realistic setting of movies accompanied by their screenplays, contained in the COGNIMUSE database. We show that, on both tasks, our method considerably outperforms a state-of-the-art weakly supervised approach, as well as other baselines.

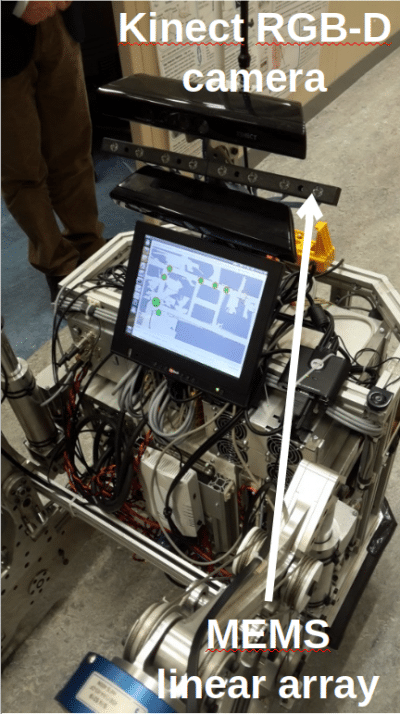

A Tsiami, P Koutras, Niki Efthymiou, P P Filntisis, G Potamianos, P Maragos. Multi3: Multi-sensory Perception System for Multi-modal Child Interaction with Multiple Robots. Conference, IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 2018. PDF: http://robotics.ntua.gr/wp-content/publications/2018_TsiamiEtAl_Multi3-MultisensorMultimodalChildInteractMultRobots_ICRA.pdf

Abstract: Child-robot interaction is an interdisciplinary research area that has been attracting growing interest, primarily focusing on edutainment applications. A crucial factor to the successful deployment and wide adoption of such applications remains the robust perception of the child's multimodal actions, when interacting with the robot in a natural and untethered fashion. Since robotic sensory and perception capabilities are platform-dependent and most often rather limited, we propose a multiple Kinect-based system to perceive the child-robot interaction scene that is robot-independent and suitable for indoors interaction scenarios. The audio-visual input from the Kinect sensors is fed into speech, gesture, and action recognition modules, appropriately developed in this paper to address the challenging nature of child-robot interaction. For this purpose, data from multiple children are collected and used for module training or adaptation. Further, information from the multiple sensors is fused to enhance module performance. The perception system is integrated in a modular multi-robot architecture demonstrating its flexibility and scalability with different robotic platforms. The whole system, called Multi3, is evaluated, both objectively at the module level and subjectively in its entirety, under appropriate child-robot interaction scenarios containing several carefully designed games between children and robots.

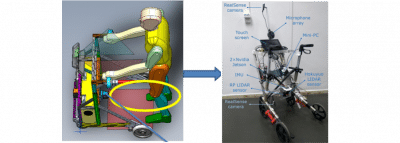

A Zlatintsi, I Rodomagoulakis, P Koutras, A C Dometios, V Pitsikalis, C S Tzafestas, P Maragos. Multimodal Signal Processing and Learning Aspects of Human-Robot Interaction for an Assistive Bathing Robot. Conference, Proc. IEEE Int'l Conf. Acous., Speech, and Signal Processing, Calgary, Canada, 2018. PDF: http://robotics.ntua.gr/wp-content/publications/Zlatintsi+_I-SUPPORT_ICASSP18.pdf

Abstract: We explore new aspects of assistive living on smart human-robot interaction (HRI) that involve automatic recognition and online validation of speech and gestures in a natural interface, providing social features for HRI. We introduce a whole framework and resources of a real-life scenario for elderly subjects supported by an assistive bathing robot, addressing health and hygiene care issues. We contribute a new dataset and a suite of tools used for data acquisition and a state-of-the-art pipeline for multimodal learning within the framework of the I-Support bathing robot, with emphasis on audio and RGB-D visual streams. We consider privacy issues by evaluating the depth visual stream along with the RGB, using Kinect sensors. The audio-gestural recognition task on this new dataset yields up to 84.5%, while the online validation of the I-Support system on elderly users accomplishes up to 84% when the two modalities are fused together. The results are promising enough to support further research in the area of multimodal recognition for assistive social HRI, considering the difficulties of the specific task.

A Tsiami, P P Filntisis, N Efthymiou, P Koutras, G Potamianos, P Maragos. Far-Field Audio-Visual Scene Perception of Multi-Party Human-Robot Interaction for Children and Adults. Conference, Proc. IEEE Int'l Conf. Acous., Speech, and Signal Processing (ICASSP), Calgary, Canada, 2018. PDF: http://robotics.ntua.gr/wp-content/publications/2018_TsiamiEtAl_FarfieldAVperceptionHRI-ChildrenAdults_ICASSP.pdf

Abstract: Human-robot interaction (HRI) is a research area of growing interest with a multitude of applications for both children and adult user groups, as, for example, in edutainment and social robotics. Crucial, however, to its wider adoption remains the robust perception of HRI scenes in natural, untethered, and multi-party interaction scenarios, across user groups. Towards this goal, we investigate three focal HRI perception modules operating on data from multiple audio-visual sensors that observe the HRI scene from the far-field, thus bypassing limitations and platform-dependency of contemporary robotic sensing. In particular, the developed modules fuse intra- and/or inter-modality data streams to perform: (i) audio-visual speaker localization; (ii) distant speech recognition; and (iii) visual recognition of hand-gestures. Emphasis is also placed on ensuring high speech and gesture recognition rates for both children and adults. Development and objective evaluation of the three modules is conducted on a corpus of both user groups, collected by our far-field multi-sensory setup, for an interaction scenario of a question-answering "guess-the-object" collaborative HRI game with a "Furhat" robot. In addition, evaluation of the game incorporating the three developed modules is reported. Our results demonstrate robust far-field audio-visual perception of the multi-party HRI scene.

Jack Hadfield, Petros Koutras, Niki Efthymiou, Gerasimos Potamianos, Costas S Tzafestas, Petros Maragos. Object assembly guidance in child-robot interaction using RGB-D based 3d tracking. Conference, 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), IEEE, pp. 347-354, 2018. PDF: http://robotics.ntua.gr/wp-content/uploads/sites/2/2018_HadfieldEtAl_ObjectAssemblyGuidance-ChildRobotInteraction_IROS.pdf

2017

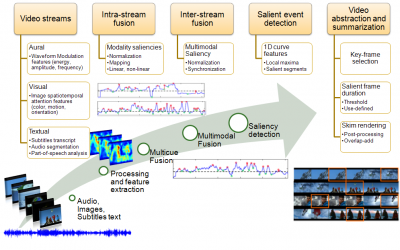

A Zlatintsi, P Koutras, G Evangelopoulos, N Malandrakis, N Efthymiou, K Pastra, A Potamianos, P Maragos. COGNIMUSE: a multimodal video database annotated with saliency, events, semantics and emotion with application to summarization. Journal article, EURASIP Journal on Image and Video Processing, 54, pp. 1–24, 2017. DOI: 10.1186/s13640-017-0194. PDF: http://robotics.ntua.gr/wp-content/publications/Zlatintsi+_COGNIMUSEdb_EURASIP_JIVP-2017.pdf

Abstract: Research related to computational modeling for machine-based understanding requires ground truth data for training, content analysis, and evaluation. In this paper, we present a multimodal video database, namely COGNIMUSE, annotated with sensory and semantic saliency, events, cross-media semantics, and emotion. The purpose of this database is manifold; it can be used for training and evaluation of event detection and summarization algorithms, for classification and recognition of audio-visual and cross-media events, as well as for emotion tracking. In order to enable comparisons with other computational models, we propose state-of-the-art algorithms, specifically a unified energy-based audio-visual framework and a method for text saliency computation, for the detection of perceptually salient events from videos. Additionally, a movie summarization system for the automatic production of summaries is presented. Two kinds of evaluation were performed, an objective based on the saliency annotation of the database and an extensive qualitative human evaluation of the automatically produced summaries, where we investigated what composes high-quality movie summaries, where both methods verified the appropriateness of the proposed methods. The annotation of the database and the code for the summarization system can be found at http://cognimuse.cs.ntua.gr/database.

A Zlatintsi, I Rodomagoulakis, V Pitsikalis, P Koutras, N Kardaris, X Papageorgiou, C Tzafestas, P Maragos. Social Human-Robot Interaction for the Elderly: Two Real-life Use Cases. Conference, ACM/IEEE International Conference on Human-Robot Interaction (HRI), Vienna, Austria, 2017. PDF: http://robotics.ntua.gr/wp-content/publications/Zlatintsi+_SocialHRIforTheElderly_HRI-17.pdf

Abstract: We explore new aspects on assistive living via smart social human-robot interaction (HRI) involving automatic recognition of multimodal gestures and speech in a natural interface, providing social features in HRI. We discuss a whole framework of resources, including datasets and tools, briefly shown in two real-life use cases for elderly subjects: a multimodal interface of an assistive robotic rollator and an assistive bathing robot. We discuss these domain specific tasks, and open source tools, which can be used to build such HRI systems, as well as indicative results. Sharing such resources can open new perspectives in assistive HRI.

2016

Georgia Panagiotaropoulou, Petros Koutras, Athanasios Katsamanis, Petros Maragos, Athanasia Zlatintsi, Athanassios Protopapas, Efstratios Karavasilis, Nikolaos Smyrnis. fMRI-based Perceptual Validation of a Computational Model for Visual and Auditory Saliency in Videos. Conference, Proc. IEEE Int'l Conf. on Image Processing (ICIP), Phoenix, AZ, USA, pp. 699-703, 2016. ISSN: 15224880. DOI: 10.1109/ICIP.2016.7532447. PDF: http://robotics.ntua.gr/wp-content/publications/PanagiotaropoulouEtAl_fMRI-Validation-CompAVsaliencyVideos_ICIP2016.pdf (alternate: http://robotics.ntua.gr/wp-content/uploads/publications/PanagiotaropoulouEtAl_fMRI-Validation-CompAVsaliencyVideos_ICIP2016.pdf)

Abstract: In this study, we make use of brain activation data to investigate the perceptual plausibility of a visual and an auditory model for visual and auditory saliency in video processing. These models have already been successfully employed in a number of applications. In addition, we experiment with parameters, modifications and suitable fusion schemes. As part of this work, fMRI data from complex video stimuli were collected, on which we base our analysis and results. The core part of the analysis involves the use of well-established methods for the manipulation of fMRI data and the examination of variability across brain responses of different individuals. Our results indicate a success in confirming the value of these saliency models in terms of perceptual plausibility.

2015

P Koutras, A Zlatintsi, E Iosif, A Katsamanis, P Maragos, A Potamianos Predicting Audio-Visual Salient Events Based on Visual, Audio and Text Modalities for Movie Summarization Conference Proc. IEEE Int'l Conf. on Image Processing, Quebec, Canada, 2015. @conference{KZI+15, title = {Predicting Audio-Visual Salient Events Based on Visual, Audio and Text Modalities for Movie Summarization}, author = {P Koutras and A Zlatintsi and E Iosif and A Katsamanis and P Maragos and A Potamianos}, url = {http://robotics.ntua.gr/wp-content/publications/KZIKMP_MovieSum2_ICIP-2015.pdf}, year = {2015}, date = {2015-09-01}, booktitle = {Proc. {IEEE} Int'l Conf. on Image Processing}, address = {Quebec, Canada}, abstract = {In this paper, we present a new and improved synergistic approach to the problem of audio-visual salient event detection and movie summarization based on visual, audio and text modalities. Spatio-temporal visual saliency is estimated through a perceptually inspired frontend based on 3D (space, time) Gabor filters and frame-wise features are extracted from the saliency volumes. For the auditory salient event detection we extract features based on Teager-Kaiser Energy Operator, while text analysis incorporates part-of-speech tagging and affective modeling of single words on the movie subtitles. For the evaluation of the proposed system, we employ an elementary and non-parametric classification technique like KNN. Detection results are reported on the MovSum database, using objective evaluations against ground-truth denoting the perceptually salient events, and human evaluations of the movie summaries. Our evaluation verifies the appropriateness of the proposed methods compared to our baseline system. Finally, our newly proposed summarization algorithm produces summaries that consist of salient and meaningful events, also improving the comprehension of the semantics.}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

Petros Koutras, Petros Maragos Estimation of eye gaze direction angles based on active appearance models Conference 2015 IEEE International Conference on Image Processing (ICIP), 2015, ISBN: 978-1-4799-8339-1. @conference{Koutras2015, title = {Estimation of eye gaze direction angles based on active appearance models}, author = {Petros Koutras and Petros Maragos}, url = {http://ieeexplore.ieee.org/document/7351237/ http://robotics.ntua.gr/wp-content/uploads/sites/2/KoutrasMaragos_EyeGaze_ICIP15.pdf}, doi = {10.1109/ICIP.2015.7351237}, isbn = {978-1-4799-8339-1}, year = {2015}, date = {2015-09-01}, booktitle = {2015 IEEE International Conference on Image Processing (ICIP)}, pages = {2424--2428}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

A Zlatintsi, P Koutras, N Efthymiou, P Maragos, A Potamianos, K Pastra Quality Evaluation of Computational Models for Movie Summarization Conference Costa Navarino, Messinia, Greece, 2015. @conference{ZKE+15, title = {Quality Evaluation of Computational Models for Movie Summarization}, author = {A Zlatintsi and P Koutras and N Efthymiou and P Maragos and A Potamianos and K Pastra}, url = {http://robotics.ntua.gr/wp-content/publications/ZlatintsiEtAl_MovieSumEval-QoMEX2015.pdf}, year = {2015}, date = {2015-05-01}, address = {Costa Navarino, Messinia, Greece}, abstract = {In this paper we present a movie summarization system and we investigate what composes high quality movie summaries in terms of user experience evaluation. We propose state-of-the-art audio, visual and text techniques for the detection of perceptually salient events from movies. The evaluation of such computational models is usually based on the comparison of the similarity between the system-detected events and some ground-truth data. For this reason, we have developed the MovSum movie database, which includes sensory and semantic saliency annotation as well as cross-media relations, for objective evaluations. The automatically produced movie summaries were qualitatively evaluated, in an extensive human evaluation, in terms of informativeness and enjoyability accomplishing very high ratings up to 80% and 90%, respectively, which verifies the appropriateness of the proposed methods.}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

P. Koutras, A. Zlatintsi, E. Iosif, A. Katsamanis, P. Maragos, A. Potamianos Predicting audio-visual salient events based on visual, audio and text modalities for movie summarization Conference Proceedings - International Conference on Image Processing, ICIP, 2015-December, 2015, ISSN: 15224880. @conference{307, title = {Predicting audio-visual salient events based on visual, audio and text modalities for movie summarization}, author = {P. Koutras and A. Zlatintsi and E. Iosif and A. Katsamanis and P. Maragos and A. Potamianos}, url = {http://robotics.ntua.gr/wp-content/uploads/publications/KZIKMP_MovieSum2_ICIP-2015.pdf}, doi = {10.1109/ICIP.2015.7351630}, issn = {15224880}, year = {2015}, date = {2015-01-01}, booktitle = {Proceedings - International Conference on Image Processing, ICIP}, volume = {2015-December}, pages = {4361--4365}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

2014

Kevis Maninis, Petros Koutras, Petros Maragos Advances on Action Recognition in Videos Using an Interest Point Detector Based on Multiband Spatio-Temporal Energies Conference ICIP, 2014, ISBN: 9781479957514. @conference{164, title = {Advances on Action Recognition in Videos Using an Interest Point Detector Based on Multiband Spatio-Temporal Energies}, author = {Kevis Maninis and Petros Koutras and Petros Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/publications/ManinisKoutrasMaragos_Action_ICIP2014.pdf}, isbn = {9781479957514}, year = {2014}, date = {2014-01-01}, booktitle = {ICIP}, pages = {1490--1494}, keywords = {}, pubstate = {published}, tppubtype = {conference} }