- (+30) 210772-2360

- maragos@cs.ntua.gr

- Office 2.1.24

Biosketch

He has served as associate editor for the IEEE Transactions on Acoustics, Speech & Signal Processing and the IEEE Transactions on Pattern Analysis and Machine Intelligence, and as an editorial board member and guest editor for several other journals on signal processing, image analysis, and computer vision. He has served as a member of the IEEE DSP, IMDSP, and MMSP technical committees, the IEEE SPS Education Board, and the Conference Board. He has co-organized, as general chair or co-chair, several international conferences and workshops on image processing, computer vision, multimedia, and robotics, including the flagship conferences EUSIPCO-2017 and IEEE ICASSP-2023. He has also served as a member of the Greek National Council for Research and Technology and of the Scientific Councils for Mathematics & Information Sciences and, more recently, for AI.

He is the recipient or co-recipient of several awards and distinctions for his academic work, including a 1987-1992 US National Science Foundation Presidential Young Investigator Award; the 1988 IEEE ASSP Young Author Best Paper Award; the 1994 IEEE SPS Senior Best Paper Award; the 1995 IEEE W.R.G. Baker Prize Award for the most outstanding original paper in all IEEE publications; the 1996 Pattern Recognition Society’s Honorable Mention Award for best paper; the CVPRW-2011 Gesture Recognition Best Paper Award; the ECCVW-2020 Data Modeling Challenge Award; selection as a CVPR-2022 best paper finalist; the PETRA-2023 Best Paper for Novelty Award; and the PETRA-2025 Best Paper Award. In 1995 he was elected Fellow of the IEEE for his contributions to the theory and application of nonlinear signal processing. He received the 2007 EURASIP Technical Achievements Award for contributions to nonlinear systems, image processing, and speech processing. In 2010 he was elected Fellow of EURASIP for his research contributions. He was elected IEEE Distinguished Lecturer for 2017-18. Since 2023 he has been a Life Fellow of the IEEE.

Publications

2025

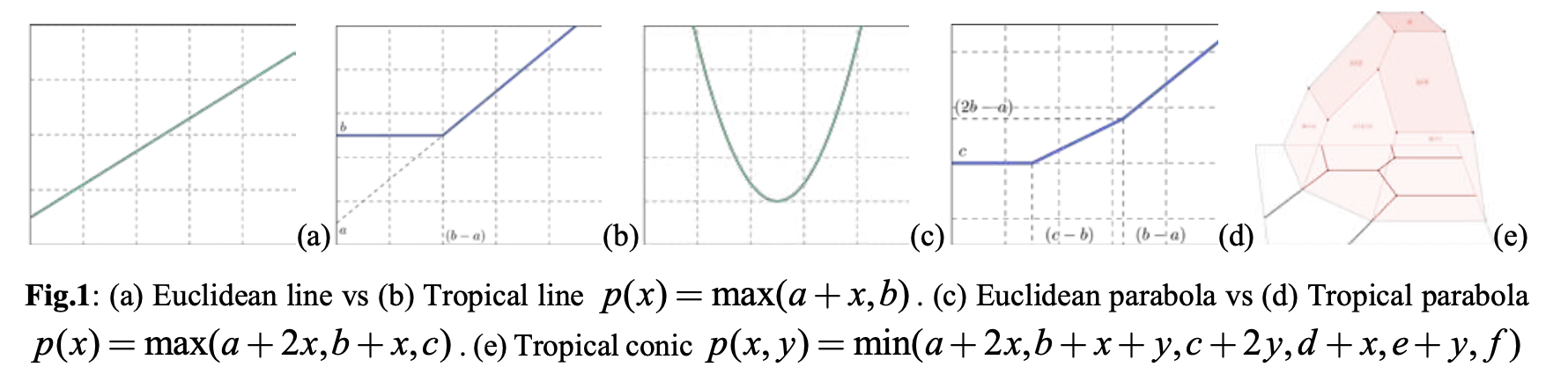

I. Kordonis, P. Maragos. Revisiting Tropical Polynomial Division: Theory, Algorithms, and Application to Neural Networks. IEEE Transactions on Neural Networks and Learning Systems, 2025. DOI: 10.1109/TNNLS.2025.3570807. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_KordonisMaragos_Tropical_Division_IEEE-TNNLS_Accepted.pdf)

D. Svyezhentsev, G. Retsinas, P. Maragos. Pre-Training For Action Recognition With Automatically Generated Fractal Datasets. International Journal of Computer Vision, 2025. DOI: 10.1007/s11263-025-02420-8. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_SvyezhentsevRetsinasMaragos_FractalPretrainForActionRecognition_IJCV_preprint.pdf)

B. Psomas, G. Retsinas, N. Efthymiadis, P. Filntisis, Y. Avrithis, P. Maragos, O. Chum, G. Tolias. Instance-Level Composed Image Retrieval. Proc. Thirty-ninth Annual Conference on Neural Information Processing Systems (NeurIPS 2025), San Diego, USA, 2025. [Webpage](https://openreview.net/forum?id=7NEP4jGKwA) [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_PsomasRetsinasEtAl_Instance-Level-Composed-Image-Retrieval_NeurIPS.pdf)

T. Aravanis, P. P. Filntisis, P. Maragos, G. Retsinas. Only-Style: Stylistic Consistency in Image Generation without Content Leakage. Proc. IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Honolulu, Hawaii, 2025. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_Aravanis_Only-Style_Stylistic_Consistency_in_Image_Generation_without_Content_Leakage_ICCVW.pdf)

K. Spathis, N. Kardaris, P. Maragos. Multi-task Learning For Joint Action and Gesture Recognition. Proc. IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Honolulu, Hawaii, 2025. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_Spathis_Multi-task-Learning-For-Joint-Action-and-Gesture-Recognition_ICCVW.pdf)

K. Papadimitriou, P. Filntisis, G. Retsinas, G. Potamianos, P. Maragos. Seeing in 2D, Thinking in 3D: 3D Hand Mesh-Guided Feature Learning for Continuous Fingerspelling. Proc. IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Honolulu, Hawaii, 2025. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_Papadimitriou_Seeing_in_2D_Thinking_in_3D_ICCVW.pdf)

M. Glytsos, P. P. Filntisis, G. Retsinas, P. Maragos. Category-Level 6D Object Pose Estimation in Agricultural Settings Using a Lattice-Deformation Framework and Diffusion-Augmented Synthetic Data. Proc. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hangzhou, China, 2025. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_Glytsos_6D-Object-PoseEstimation-in-Agriculture_LatticeDeform-DiffusionAugment-SyntheticData_IROS.pdf)

O. Konstantaropoulos, M. Khamassi, P. Maragos, G. Retsinas. Push, See, Predict: Emergent Perception Through Intrinsically Motivated Play. Proc. 2025 IEEE Int'l Conf. on Development and Learning (ICDL), Prague, Czechia, 2025. DOI: 10.1109/ICDL63968.2025.11204356. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_KonstantaropoulosKhamassiMaragosRetsinas_Push-See-Predict_ICDL.pdf)

N. Giannakakis, A. Manetas, P. P. Filntisis, P. Maragos, G. Retsinas. Object-Centric Action-Enhanced Representations for Robot Visuo-Motor Policy Learning. Proc. 2025 IEEE Int'l Conf. on Development and Learning (ICDL), Prague, Czechia, 2025. DOI: 10.1109/ICDL63968.2025.11204392. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_GiannakakisManetas_ObjectCentricActionRepresent-RobotVisuoMotorLearn_ICDL.pdf)

S. Zarifis, I. Kordonis, P. Maragos. Diffusion-Based Forecasting for Uncertainty-Aware Model Predictive Control. Proc. 33rd European Signal Processing Conf. (EUSIPCO 2025), Palermo, Italy, 2025. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_ZarifisKordonisMaragos_Diffusion-Forecasting-MPC_EUSIPCO.pdf)

K. Fotopoulos, C. Garoufis, P. Maragos. Sparse Hybrid Linear-Morphological Networks. Proc. 33rd European Signal Processing Conf. (EUSIPCO 2025), Palermo, Italy, 2025. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_FotopoulosGaroufisMaragos_SparseMorphNNs_EUSIPCO.pdf)

P. P. Drakopoulos, G. Moustris, C. Tzafestas, P. Maragos. Indoor Turn-By-Turn Navigation Assistance for Robotic Rollators. Proc. 18th ACM Int'l Conf. on Pervasive Technologies Related to Assistive Environments (PETRA 2025), Corfu, Greece, 2025. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_DrakopoulosMoustris_IndoorTurn-By-TurnNavigationAssistance-for-RoboticRollators_PETRA.pdf)

C. Pratikaki, P. P. Filntisis, A. Katsamanis, A. Roussos, P. Maragos. A Transformer-Based Framework for Greek Sign Language Production using Extended Skeletal Motion Representations. Proc. 18th ACM Int'l Conf. on Pervasive Technologies Related to Assistive Environments (PETRA 2025), Corfu, Greece, 2025. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_Pratikaki_Transformer-for-Greek-Sign-Language-Production-using-Skeletal-Motion_PETRA.pdf)

A. Androni, C. Garoufis, A. Zlatintsi, P. Maragos. Feature-Level Multimodal Fusion for Relapse Detection in Patients with Psychosis. Proc. 25th Int'l Conf. on Digital Signal Processing (DSP 2025), Costa Navarino, Greece, 2025. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_Androni_FeatureLevelFusion-RelapseDetection_DSP.pdf)

D. N. Makropoulos, P. P. Filntisis, A. Prospathopoulos, D. Kassis, A. Tsiami, P. Maragos. Improving Classification of Marine Mammal Vocalizations Using Vision Transformers and Phase-Related Features. Proc. 25th Int'l Conf. on Digital Signal Processing (DSP 2025), Costa Navarino, Greece, 2025. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_Makropoulos_ClassificationMarineMammalVocalizations_DSP.pdf)

N. Kardaris, P. Mermigkas, G. Moustris, C. Tzafestas, P. Maragos. Automated Thermal Fault Detection in Ultra-High Voltage Substation Equipment. Proc. IFAC Workshop on Smart Energy Systems for Efficient and Sustainable Smart Grids and Smart Cities (SENSYS 2025), Bari, Italy, 2025. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_Kardaris_Automated-Thermal-Fault-Detection-in-UHVS_SENSYS.pdf)

P. Mermigkas, G. P. Moustris, C. S. Tzafestas, P. Maragos. Constructing Visibility Maps of Optimal Positions for Robotic Inspection in Ultra-High Voltage Centers. Proc. 33rd Mediterranean Conference on Control and Automation (MED 2025), Tangier, Morocco, 2025. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_Mermigkas_Constructing-Visibility-Maps-Optimal-Positions-UHVC_MED.pdf)

P. P. Filntisis, E. Tsaprazlis, P. Oikonomou, F. Mattioli, V. G. Santucci, G. Retsinas, P. Maragos. Towards Open-ended Robotic Exploration using Vision-Inspired Similarity and Foundation Models. Proc. 2025 IEEE International Conference on Robotics and Automation (ICRA 2025), Atlanta, USA, 2025. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_Filntisis_Open-endedRoboticExplorationUsingVision-InspiredSimilarity-Foundation-Models_ICRA.pdf)

P. Oikonomou, G. Retsinas, P. Maragos, C. S. Tzafestas. Proactive tactile exploration for object-agnostic shape reconstruction from minimal visual priors. Proc. 2025 IEEE International Conference on Robotics and Automation (ICRA 2025), Atlanta, USA, 2025. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2025_Oikonomou_Proactive_tactile_exploration_for_object_agnostic_shape_reconstruction_ICRA.pdf)

2024

A. Zlatintsi, P. P. Filntisis, N. Efthymiou, C. Garoufis, G. Retsinas, T. Sounapoglou, I. Maglogiannis, P. Tsanakas, N. Smyrnis, P. Maragos. Person Identification and Relapse Detection from Continuous Recordings of Biosignals Challenge: Overview and Results. IEEE Open Journal of Signal Processing, 2024. DOI: 10.1109/OJSP.2024.3376300. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/Zlatintsi_e-PreventionChallengeOverview_OJSP-2024_preprint.pdf)

L. Liapi, E. Manoudi, M. Revelou, K. Christodoulou, P. Koutras, P. Maragos, Argiro Vatakis. Time perception in film viewing: A modulation of scene's duration estimates as a function of film editing. Acta Psychologica, 244, pp. 104206, 2024. DOI: 10.1016/j.actpsy.2024.104206. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2024_LiapiVataki_TimePerceptionInFilmViewing-ModulationOfSceneDuration_ActaPsychol.pdf)

A. Manetas, P. Mermigkas, P. Maragos. SDPL-SLAM: Introducing Lines in Dynamic Visual SLAM and Multi-Object Tracking. Proc. IEEE/RSJ Int'l Conf. on Intelligent Robots and Systems (IROS 2024), Abu Dhabi, UAE, 2024. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2024_Manetas_IntroduceLinesInVisualSLAM-MultiObjectTrack_IROS.pdf)

C. Garoufis, A. Zlatintsi, P. Maragos. Pre-Training Music Classification Models via Music Source Separation. Proc. 32nd European Signal Processing Conference (EUSIPCO 2024), Lyon, France, 2024. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2024_GaroufisPretrainMusicClassifModelsViaMSS_EUSIPCO.pdf)

George Retsinas, Panagiotis P. Filntisis, Radek Danecek, Victoria F. Abrevaya, Anastasios Roussos, Timo Bolkart, Petros Maragos. 3D Facial Expressions through Analysis-by-Neural-Synthesis. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2490-2501, 2024. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/Retsinas_SMIRK-3D_Facial_Expressions_through_Analysis-by-Neural-Synthesis_CVPR2024.pdf)

I. Kordonis, E. Theodosis, G. Retsinas, P. Maragos. Matrix Factorization in Tropical and Mixed Tropical-Linear Algebras. Proc. IEEE Int'l Conference on Acoustics, Speech, and Signal Processing (ICASSP 2024), Seoul, Korea, 2024. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/Kordonis_MatrixFactorizationInMixedTropicalLinearAlegbras_ICASSP2024.pdf)

N. Efthymiou, G. Retsinas, P. P. Filntisis, P. Maragos. Augmenting Transformer Autoencoders with Phenotype Classification for Robust Detection of Psychotic Relapses. Proc. IEEE Int'l Conference on Acoustics, Speech, and Signal Processing (ICASSP 2024), Seoul, Korea, 2024. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/Efthymiou_TransformerPhenotypeClassif-DetectPsychoticRelapses_ICASSP2024.pdf)

N. Pagliarani, C. Tzafestas, E. Papadopoulos, P. Maragos, A. Mastrogeorgiou, A. Porichis, H. Grogan, R. Ehrhardt, M. Cianchetti. SoftGrip: Towards a Soft Robotic Platform for Automatized Mushroom Harvesting. In C. Secchi, L. Marconi (eds.), European Robotics Forum 2024, pp. 48-52, Springer Nature, 2024. DOI: 10.1007/978-3-031-76424-0_9. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2024_SoftGrip_ERF.pdf)

2023

K. Papadimitriou, G. Potamianos, G. Sapountzaki, T. Goulas, E. Efthimiou, S.-E. Fotinea, P. Maragos. Greek sign language recognition for an education platform. Universal Access in the Information Society, 2023. DOI: 10.1007/s10209-023-01017-7. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2023_Papadimitriou_GreekSignLangRecognForEducation_UAIS.pdf)

G. Retsinas, N. Efthymiou, D. Anagnostopoulou, P. Maragos. Mushroom Detection and Three Dimensional Pose Estimation from Multi-View Point Clouds. Sensors, 23(7), pp. 3576, 2023. DOI: 10.3390/s23073576. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2023_Retsinas_MushromDetection-and-3D-PoseEstimation_Sensors.pdf)

E. Kalisperakis, T. Karantinos, M. Lazaridi, V. Garyfalli, P. P. Filntisis, A. Zlatintsi, N. Efthymiou, A. Mantas, L. Mantonakis, T. Mougiakos, I. Maglogiannis, P. Tsanakas, P. Maragos, N. Smyrnis. Smartwatch digital phenotypes predict positive and negative symptom variation in a longitudinal monitoring study of patients with psychotic disorders. Frontiers in Psychiatry, 14, 2023. DOI: 10.3389/fpsyt.2023.1024965. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/2023_KalisperakisEtAl_ePrevention_FrontiersPsychiatry.pdf)

E. Tsaprazlis, G. Smyrnis, A. G. Dimakis, P. Maragos. Enhancing CLIP with a Third Modality. Proc. 37th Conference on Neural Information Processing Systems (NeurIPS 2023): Workshop on Self-Supervised Learning - Theory and Practice, New Orleans, 2023. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/TsaprazlisEtAl_Enhance-CLIP-with-ThirdModality_NIPSW2023.pdf)

A. Glentis-Georgoulakis, G. Retsinas, P. Maragos. Feather: An Elegant Solution to Effective DNN Sparsification. Proc. 34th British Machine Vision Conference (BMVC 2023), Aberdeen, UK, 2023. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/GlentisEtAl_Feather-EffectiveSolution-to-DNN-Sparsification_BMVC2023.pdf)

M. Konstantinou, G. Retsinas, P. Maragos. Enhancing Action Recognition in Vehicle Environments With Human Pose Information. Proc. Int'l Conf. on Pervasive Technologies Related to Assistive Environments (PETRA 2023), 2023. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/Konstantinou_ActionRecogn-in-VehicleEnvironment_PETRA2023.pdf)

N. Kegkeroglou, P. P. Filntisis, P. Maragos. Medical Face Masks and Emotion Recognition from the Body: Insights from a Deep Learning Perspective. Proc. Int'l Conf. on Pervasive Technologies Related to Assistive Environments (PETRA 2023), 2023. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/Kegkeroglou_MedicalFaceMasks-EmotionRecognFromBody_PETRA2023.pdf)

D. Anagnostopoulou, G. Retsinas, N. Efthymiou, P. P. Filntisis, P. Maragos. A Realistic Synthetic Mushroom Scenes Dataset. Proc. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 4th Agriculture Vision Workshop, Vancouver, Canada, 2023. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/Anagnostopoulou_CVPRW2023_paper.pdf) [Poster](http://robotics.ntua.gr/wp-content/uploads/sites/2/Anagnostopoulou_CVPRW2023_poster.pdf)

D. N. Makropoulos, A. Tsiami, A. Prospathopoulos, D. Kassis, A. Frantzis, E. Skarsoulis, G. Piperakis, P. Maragos. Convolutional Recurrent Neural Networks for the Classification of Cetacean Bioacoustic Patterns. Proc. 48th Int'l Conf. on Acoustics, Speech, and Signal Processing (ICASSP 2023), Rhodes Island, 2023. [PDF](http://robotics.ntua.gr/wp-content/uploads/sites/2/Makropoulos_ICASSP2023_paper.pdf) [Poster](http://robotics.ntua.gr/wp-content/uploads/sites/2/Makropoulos_ICASSP2023_poster.pdf)

D Charitou, C Garoufis, A Zlatintsi, P Maragos Exploring Polyphonic Accompaniment Generation using Generative Adversarial Networks Conference Proc. 20th Sound and Music Computing Conference (SMC 2023), Stockholm, Sweden, 2023. @conference{charitou2023exploring, title = {Exploring Polyphonic Accompaniment Generation using Generative Adversarial Networks}, author = {D Charitou and C Garoufis and A Zlatintsi and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Charitou_SMC2023_paper.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Charitou_SMC2023_slides.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Charitou_SMC2023_poster.pdf}, year = {2023}, date = {2023-06-01}, booktitle = {Proc. 20th Sound and Music Computing Conference (SMC 2023)}, address = {Stockholm, Sweden}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

N Efthymiou, G Retsinas, P P Filntisis, A Zlatintsi, E Kalisperakis, V Garyfalli, T Karantinos, M Lazaridi, N Smyrnis, P Maragos From Digital Phenotype Identification To Detection Of Psychotic Relapses Conference Proc. IEEE International Conference on Healthcare Informatics, Houston, TX, USA, 2023. @conference{efthymiou2023digital, title = {From Digital Phenotype Identification To Detection Of Psychotic Relapses}, author = {N Efthymiou and G Retsinas and P P Filntisis and A Zlatintsi and E Kalisperakis and V Garyfalli and T Karantinos and M Lazaridi and N Smyrnis and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Efthymiou_ICHI2023_paper.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Efthymiou_ICHI2023_slides.pdf}, year = {2023}, date = {2023-06-01}, booktitle = {Proc. IEEE International Conference on Healthcare Informatics}, address = {Houston, TX, USA}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

C Garoufis, A Zlatintsi, P Maragos Multi-Source Contrastive Learning from Musical Audio Conference Proc. 20th Sound and Music Computing Conference (SMC 2023), Stockholm, Sweden, 2023. @conference{garoufis2023multi, title = {Multi-Source Contrastive Learning from Musical Audio}, author = {C Garoufis and A Zlatintsi and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Garoufis_SMC2023_paper.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Garoufis_SMC2023_poster.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Garoufis_SMC2023_slides.pdf}, year = {2023}, date = {2023-06-01}, booktitle = {Proc. 20th Sound and Music Computing Conference (SMC 2023)}, address = {Stockholm, Sweden}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

G Retsinas, N Efthymiou, P Maragos Mushroom Segmentation and 3D Pose Estimation From Point Clouds Using Fully Convolutional Geometric Features and Implicit Pose Encoding Conference Proc. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 4th Agriculture Vision Workshop, Vancouver, Canada, 2023. @conference{retsinas2023mushroomb, title = {Mushroom Segmentation and 3D Pose Estimation From Point Clouds Using Fully Convolutional Geometric Features and Implicit Pose Encoding}, author = {G Retsinas and N Efthymiou and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Retsinas_CVPRW2023_Mushroom_Segmentation_and_3D_Pose_Estimation_From_Point_Clouds_Using_paper.pdf}, year = {2023}, date = {2023-06-01}, booktitle = {Proc. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 4th Agriculture Vision Workshop}, address = {Vancouver, Canada}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

C O Tze, P P Filntisis, A-L Dimou, A Roussos, P Maragos Neural Sign Reenactor: Deep Photorealistic Sign Language Retargeting Conference Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), AI for Content Creation Workshop (AI4CC), Vancouver, Canada, 2023. @conference{tze2023neural, title = {Neural Sign Reenactor: Deep Photorealistic Sign Language Retargeting}, author = {C O Tze and P P Filntisis and A-L Dimou and A Roussos and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Tze_CVPRW2023_Neural_Sign_Reenactor_Paper.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Tze_CVPRW2023_Neural_Sign_Reenactor_Poster.pdf}, year = {2023}, date = {2023-06-01}, booktitle = {Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), AI for Content Creation Workshop (AI4CC)}, address = {Vancouver, Canada}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

G Retsinas, G Sfikas, P P Filntisis, P Maragos Newton-based Trainable Learning Rate Conference Proc. 48th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2023), Rhodes, Greece, 2023. @conference{retsinas2023newton, title = {Newton-based Trainable Learning Rate}, author = {G Retsinas and G Sfikas and P P Filntisis and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Retsinas_ICASSP2023_Newton-Based-Trainable-Learning-Rate.pdf}, year = {2023}, date = {2023-06-01}, booktitle = {Proc. 48th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2023)}, address = {Rhodes, Greece}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

E Fekas, A Zlatintsi, P P Filntisis, C Garoufis, N Efthymiou, P Maragos Relapse Prediction from Long-Term Wearable Data using Self-Supervised Learning and Survival Analysis Conference Proc. 48th Int'l Conf. on Acoustics, Speech, and Signal Processing (ICASSP-2023), Rhodes Island, 2023. @conference{fekas2023relapse, title = {Relapse Prediction from Long-Term Wearable Data using Self-Supervised Learning and Survival Analysis}, author = {E Fekas and A Zlatintsi and P P Filntisis and C Garoufis and N Efthymiou and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Fekas_ICASSP2023_paper.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Fekas_ICASSP2023_slides.pdf}, year = {2023}, date = {2023-06-01}, booktitle = {Proc. 48th Int'l Conf. on Acoustics, Speech, and Signal Processing (ICASSP-2023)}, address = {Rhodes Island}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

P P Filntisis, G Retsinas, F Paraperas-Papantoniou, A Katsamanis, A Roussos, P Maragos SPECTRE: Visual Speech-Informed Perceptual 3D Facial Expression Reconstruction from Videos Conference Proc. 2023 IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), 5th Workshop and Competition on Affective Behavior Analysis in-the-wild (ABAW), Vancouver, Canada, 2023. @conference{filntisis2023spectre, title = {SPECTRE: Visual Speech-Informed Perceptual 3D Facial Expression Reconstruction from Videos}, author = {P P Filntisis and G Retsinas and F Paraperas-Papantoniou and A Katsamanis and A Roussos and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Filntisis_CVPRW2023_SPECTRE_Visual_Speech-Informed_Perceptual_3D_Facial_Expression_Reconstruction_From_Videos_paper.pdf}, year = {2023}, date = {2023-06-01}, booktitle = {Proc. 2023 IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), 5th Workshop and Competition on Affective Behavior Analysis in-the-wild (ABAW)}, address = {Vancouver, Canada}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

P Tzathas, P Maragos, A Roussos 3D Neural Sculpting (3DNS): Editing Neural Signed Distance Functions Conference Proc. IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2023. @conference{tzathas2023neural, title = {3D Neural Sculpting (3DNS): Editing Neural Signed Distance Functions}, author = {P Tzathas and P Maragos and A Roussos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Tzathas_3D_Neural_Sculpting_3DNS_Editing_Neural_Signed_Distance_Functions_WACV2023.pdf}, year = {2023}, date = {2023-01-01}, booktitle = {Proc. IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

P. Maragos Multimodal Robot Perception and Interaction Miscellaneous Keynote Talk, PETRA 2023, Kerkyra, 05 Jul., 2023. @misc{Maragos2023b, title = {Multimodal Robot Perception and Interaction}, author = {P. Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/PM_20230705_MRPI_KeynoteTalk_PETRA2023_Kerkyra-1.pdf}, year = {2023}, date = {2023-07-05}, howpublished = {Keynote Talk, PETRA 2023, Kerkyra, 05 Jul.}, keywords = {}, pubstate = {published}, tppubtype = {misc} }

2022

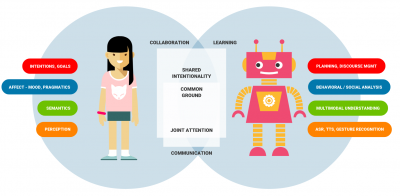

N Efthymiou, P P Filntisis, P Koutras, A Tsiami, J Hadfield, G Potamianos, P Maragos ChildBot: Multi-robot perception and interaction with children Journal Article Robotics and Autonomous Systems, 150, pp. 103975, 2022. @article{efthymiou2022childbot, title = {ChildBot: Multi-robot perception and interaction with children}, author = {N Efthymiou and P P Filntisis and P Koutras and A Tsiami and J Hadfield and G Potamianos and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2022_EfthymiouEtAl_ChildBot-MultiRobotPerception-InteractionChildren_RAS.pdf}, doi = {10.1016/j.robot.2021.103975}, year = {2022}, date = {2022-01-01}, journal = {Robotics and Autonomous Systems}, volume = {150}, pages = {103975}, keywords = {}, pubstate = {published}, tppubtype = {article} }

A Zlatintsi, P P Filntisis, C Garoufis, N Efthymiou, P Maragos, A Menychtas, I Maglogiannis, P Tsanakas, T Sounapoglou, E Kalisperakis, T Karantinos, M Lazaridi, V Garyfali, A Mantas, L Mantonakis, N Smyrnis e-Prevention: Advanced Support System for Monitoring and Relapse Prevention in Patients with Psychotic Disorders Analysing Long-Term Multimodal Data from Wearables and Video Captures Journal Article Sensors, 22 (19), pp. 7544, 2022. @article{zlatintsi2022e-prevention, title = {e-Prevention: Advanced Support System for Monitoring and Relapse Prevention in Patients with Psychotic Disorders Analysing Long-Term Multimodal Data from Wearables and Video Captures}, author = {A Zlatintsi and P P Filntisis and C Garoufis and N Efthymiou and P Maragos and A Menychtas and I Maglogiannis and P Tsanakas and T Sounapoglou and E Kalisperakis and T Karantinos and M Lazaridi and V Garyfali and A Mantas and L Mantonakis and N Smyrnis}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2022_ZlatintsiEtAl_EPrevention_SENSORS2022.pdf}, doi = {10.3390/s22197544}, year = {2022}, date = {2022-01-01}, journal = {Sensors}, volume = {22}, number = {19}, pages = {7544}, keywords = {}, pubstate = {published}, tppubtype = {article} }

R Dromnelle, E Renaudo, M Chetouani, P Maragos, R Chatila, B Girard, M Khamassi Reducing Computational Cost During Robot Navigation and Human-Robot Interaction with a Human-Inspired Reinforcement Learning Architecture Journal Article International Journal of Social Robotics, 2022. @article{dromnelle2022reducing, title = {Reducing Computational Cost During Robot Navigation and Human-Robot Interaction with a Human-Inspired Reinforcement Learning Architecture}, author = {R Dromnelle and E Renaudo and M Chetouani and P Maragos and R Chatila and B Girard and M Khamassi}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2022_DromnelleEtAl_RL-ReduceComputationRobotNavigation-HRI_IJSR.pdf}, doi = {10.1007/s12369-022-00942-6}, year = {2022}, date = {2022-01-01}, journal = {International Journal of Social Robotics}, keywords = {}, pubstate = {published}, tppubtype = {article} }

N Tsilivis, A Tsiamis, P Maragos Toward a Sparsity Theory on Weighted Lattices Journal Article Journal of Mathematical Imaging and Vision, 2022. @article{tsilivis2022toward, title = {Toward a Sparsity Theory on Weighted Lattices}, author = {N Tsilivis and A Tsiamis and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2022_TsilivisTsiamisMaragos_SparsityTheoryOnWeightedLattices_JMIV.pdf}, doi = {10.1007/s10851-022-01075-1}, year = {2022}, date = {2022-01-01}, journal = {Journal of Mathematical Imaging and Vision}, keywords = {}, pubstate = {published}, tppubtype = {article} }

D Anagnostopoulou, N Efthymiou, C Papailiou, P Maragos Child Engagement Estimation in Heterogeneous Child-Robot Interactions Using Spatiotemporal Visual Cues Conference Proc. 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022), Kyoto, Japan, 2022. @conference{anagnostopoulou2022child, title = {Child Engagement Estimation in Heterogeneous Child-Robot Interactions Using Spatiotemporal Visual Cues}, author = {D Anagnostopoulou and N Efthymiou and C Papailiou and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Anagnostopoulou_IROS2022_paper.pdf}, year = {2022}, date = {2022-10-01}, booktitle = {Proc. 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022)}, address = {Kyoto, Japan}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

M Panagiotou, A Zlatintsi, P P Filntisis, A J Roumeliotis, N Efthymiou, P Maragos A Comparative Study of Autoencoder Architectures for Mental Health Analysis using Wearable Sensors Data Conference Proc. 30th European Signal Processing Conference (EUSIPCO), Belgrade, Serbia, 2022. @conference{panagiotou2022comparative, title = {A Comparative Study of Autoencoder Architectures for Mental Health Analysis using Wearable Sensors Data}, author = {M Panagiotou and A Zlatintsi and P P Filntisis and A J Roumeliotis and N Efthymiou and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2022_PanagiotouEtAl_ComStudyAutoencodersMentalHealthWearables_EUSIPCO2022.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Panagiotou_EUSIPCO2022_Presentation_slides.pdf}, year = {2022}, date = {2022-09-01}, booktitle = {Proc. 30th European Signal Processing Conference (EUSIPCO)}, address = {Belgrade, Serbia}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

C Garoufis, A Zlatintsi, P P Filntisis, N Efthymiou, E Kalisperakis, T Karantinos, V Garyfalli, M Lazaridi, N Smyrnis, P Maragos Towards Unsupervised Subject-Independent Speech-Based Relapse Detection in Patients with Psychosis using Variational Autoencoders Conference Proc. 30th European Signal Processing Conference (EUSIPCO), Belgrade, Serbia, 2022. @conference{garoufis2022towards, title = {Towards Unsupervised Subject-Independent Speech-Based Relapse Detection in Patients with Psychosis using Variational Autoencoders}, author = {C Garoufis and A Zlatintsi and P P Filntisis and N Efthymiou and E Kalisperakis and T Karantinos and V Garyfalli and M Lazaridi and N Smyrnis and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2022_GaroufisEtAl_UnsupervisedSpeechBasedRelapseDetectionVAES_EUSIPCO2022.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Garoufis_SubjectIndependentRelapseDetectionAudioVAEs_EUSIPCO22_slides.pdf}, year = {2022}, date = {2022-09-01}, booktitle = {Proc. 30th European Signal Processing Conference (EUSIPCO)}, address = {Belgrade, Serbia}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

P Papantonakis, C Garoufis, P Maragos Multi-band Masking for Waveform-based Singing Voice Separation Conference Proc. 30th European Signal Processing Conference (EUSIPCO), Belgrade, Serbia, 2022. @conference{papantonakis2022multi, title = {Multi-band Masking for Waveform-based Singing Voice Separation}, author = {P Papantonakis and C Garoufis and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Papantonakis_MultibandMaskingSVS_EUSIPCO22_Paper.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Papantonakis_MultibandMaskingSVS_EUSIPCO22_Poster.pdf}, year = {2022}, date = {2022-08-01}, booktitle = {Proc. 30th European Signal Processing Conference (EUSIPCO)}, address = {Belgrade, Serbia}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

I Asmanis, P Mermigkas, G Chalvatzaki, J Peters, P Maragos A Semantic Enhancement of Unified Geometric Representations for Improving Indoor Visual SLAM Conference Proc. 19th Int'l Conf. on Ubiquitous Robots (UR 2022), Jeju, Korea, 2022. @conference{asmanis2022semantic, title = {A Semantic Enhancement of Unified Geometric Representations for Improving Indoor Visual SLAM}, author = {I Asmanis and P Mermigkas and G Chalvatzaki and J Peters and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2022_AsmanisMermigkas_SemanticEnhanceGeomRepres-IndoorVisualSLAM_UR.pdf}, year = {2022}, date = {2022-07-01}, booktitle = {Proc. 19th Int'l Conf. on Ubiquitous Robots (UR 2022)}, address = {Jeju, Korea}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

M. Kaliakatsos-Papakostas, G. Bastas, D. Makris, D. Herremans, V. Katsouros, P. Maragos A Machine Learning Approach for MIDI to Guitar Tablature Conversion Conference Proc. 19th Sound and Music Computing Conference (SMC 2022), Saint-Étienne, France, 2022. @conference{Kaliakatsos-Papakostas2022, title = {A Machine Learning Approach for MIDI to Guitar Tablature Conversion}, author = {M. Kaliakatsos-Papakostas and G. Bastas and D. Makris and D. Herremans and V. Katsouros and P. Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2022_Kaliakatsos-Papakostas_ML-MIDI-GuitarTablatureTranscription_SMC-1.pdf}, doi = {10.5281/zenodo.6573024}, year = {2022}, date = {2022-06-04}, booktitle = {Proc. 19th Sound and Music Computing Conference (SMC 2022)}, address = {Saint-Étienne, France}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

G Retsinas, P Filntisis, N Kardaris, P Maragos Attribute-based Gesture Recognition: Generalization to Unseen Classes Conference Proc. 14th Image, Video, and Multidimensional Signal Processing Workshop (IVMSP 2022), Nafplio, Greece, 2022. @conference{retsinas2022attribute, title = {Attribute-based Gesture Recognition: Generalization to Unseen Classes}, author = {G Retsinas and P Filntisis and N Kardaris and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Retsinas_IVMSP2022_paper.pdf}, year = {2022}, date = {2022-06-01}, booktitle = {Proc. 14th Image, Video, and Multidimensional Signal Processing Workshop (IVMSP 2022)}, address = {Nafplio, Greece}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

C O Tze, P Filntisis, A Roussos, P Maragos Cartoonized Anonymization of Sign Language Videos Conference Proc. 14th IEEE Image, Video, and Multidimensional Signal Processing Workshop (IVMSP 2022), Nafplio, Greece, 2022. @conference{tze2022cartoonized, title = {Cartoonized Anonymization of Sign Language Videos}, author = {C O Tze and P Filntisis and A Roussos and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Tze_IVMSP2022_Cartoonized-Anonymization-Sign-Videos_paper.pdf}, year = {2022}, date = {2022-06-01}, booktitle = {Proc. 14th IEEE Image, Video, and Multidimensional Signal Processing Workshop (IVMSP 2022)}, address = {Nafplio, Greece}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

F Paraperas-Papantoniou, P P Filntisis, P Maragos, A Roussos Neural Emotion Director: Speech-preserving semantic control of facial expressions in “in-the-wild” videos Conference Proc. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, USA, 2022, (CVPR-2022 Best Paper Finalist). @conference{paraperas2022neural, title = {Neural Emotion Director: Speech-preserving semantic control of facial expressions in “in-the-wild” videos}, author = {F Paraperas-Papantoniou and P P Filntisis and P Maragos and A Roussos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Paraperas_NED-SpeechPreservingSemanticControlFacialExpressions_CVPR2022_paper.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Paraperas_cvpr2022_NED_poster.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Paraperas_NED_CVPR2022_supplemental-material.pdf}, year = {2022}, date = {2022-06-01}, booktitle = {Proc. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)}, address = {New Orleans, USA}, note = {CVPR-2022 Best Paper Finalist}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

G Bastas, S Koutoupis, M K.-Papakostas, V Katsouros, P Maragos A Few-sample Strategy for Guitar Tablature Transcription Based on Inharmonicity Analysis and Playability Constraints Conference Proc. 47th IEEE Int’l Conf. on Acoustics, Speech and Signal Processing (ICASSP-2022), 2022. @conference{bastas2022few, title = {A Few-sample Strategy for Guitar Tablature Transcription Based on Inharmonicity Analysis and Playability Constraints}, author = {G Bastas and S Koutoupis and M K.-Papakostas and V Katsouros and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/BastasKoutoupis_TablatureTranscription_ICASSP22_Paper.pdf}, year = {2022}, date = {2022-05-01}, booktitle = {Proc. 47th IEEE Int’l Conf. on Acoustics, Speech and Signal Processing (ICASSP-2022)}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

K Avramidis, C Garoufis, A Zlatintsi, P Maragos Enhancing Affective Representations of Music-Induced EEG through Multimodal Supervision and Latent Domain Adaptation Conference Proc. 47th IEEE Int’l Conf. on Acoustics, Speech and Signal Processing (ICASSP-2022), 2022. @conference{avramidis2022enhancing, title = {Enhancing Affective Representations of Music-Induced EEG through Multimodal Supervision and Latent Domain Adaptation}, author = {K Avramidis and C Garoufis and A Zlatintsi and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Avramidis_MusicEEGCrossModal_ICASSP22_Paper.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Avramidis_ICASSP2022-poster.pdf}, year = {2022}, date = {2022-05-01}, booktitle = {Proc. 47th IEEE Int’l Conf. on Acoustics, Speech and Signal Processing (ICASSP-2022)}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

M Parelli, K Papadimitriou, G Potamianos, G Pavlakos, P Maragos Spatio-Temporal Graph Convolutional Networks for Continuous Sign Language Recognition Conference Proc. 47th IEEE Int’l Conf. on Acoustics, Speech and Signal Processing (ICASSP-2022), 2022. @conference{parelli2022spatio, title = {Spatio-Temporal Graph Convolutional Networks for Continuous Sign Language Recognition}, author = {M Parelli and K Papadimitriou and G Potamianos and G Pavlakos and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/ParelliPapadimitriou_SignLanguageRecognitionGCNNs_ICASSP22_Paper.pdf}, year = {2022}, date = {2022-05-01}, booktitle = {Proc. 47th IEEE Int’l Conf. on Acoustics, Speech and Signal Processing (ICASSP-2022)}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

P Misiakos, G Smyrnis, G Retsinas, P Maragos Neural Network Approximation based on Hausdorff Distance of Tropical Zonotopes Conference Proc. Int’l Conf. on Learning Representations (ICLR 2022), 2022. @conference{misiakos2022neural, title = {Neural Network Approximation based on Hausdorff Distance of Tropical Zonotopes}, author = {P Misiakos and G Smyrnis and G Retsinas and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Misiakos_ICLR2022_TropicalGeometry_paper.pdf https://iclr.cc/virtual/2022/poster/5971 http://robotics.ntua.gr/wp-content/uploads/sites/2/Misiakos_ICLR2022_TropicalGeometry_slides.pdf}, year = {2022}, date = {2022-01-01}, booktitle = {Proc. Int’l Conf. on Learning Representations (ICLR 2022)}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

2021

Petros Maragos, Vasileios Charisopoulos, Emmanouil Theodosis Tropical Geometry and Machine Learning Journal Article Proceedings of the IEEE, 109 (5), pp. 728-755, 2021. @article{MCT21, title = {Tropical Geometry and Machine Learning}, author = {Petros Maragos and Vasileios Charisopoulos and Emmanouil Theodosis}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/MaragosCharisopoulosTheodosis_TGML_PIEEE20211.pdf}, doi = {10.1109/JPROC.2021.3065238}, year = {2021}, date = {2021-12-31}, journal = {Proceedings of the IEEE}, volume = {109}, number = {5}, pages = {728-755}, keywords = {}, pubstate = {published}, tppubtype = {article} }

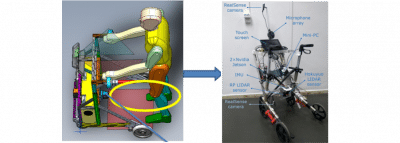

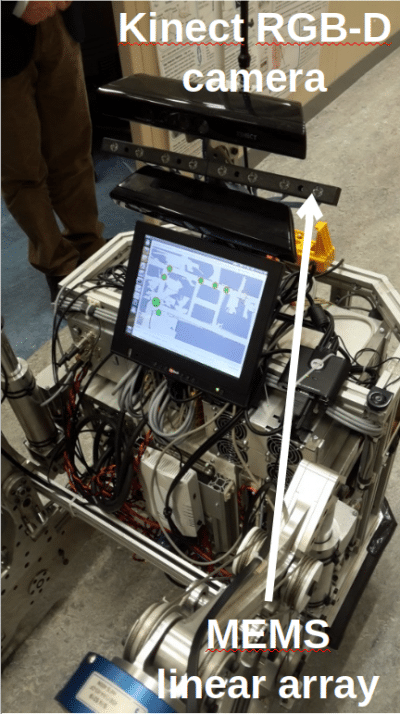

George Moustris, Nikolaos Kardaris, Antigoni Tsiami, Georgia Chalvatzaki, Petros Koutras, Athanasios Dometios, Paris Oikonomou, Costas Tzafestas, Petros Maragos, Eleni Efthimiou, Xanthi Papageorgiou, Stavroula-Evita Fotinea, Yiannis Koumpouros, Anna Vacalopoulou, Effie Papageorgiou, Alexandra Karavasili, Foteini Koureta, Dimitris Dimou, Alexandros Nikolakakis, Konstantinos Karaiskos, Panagiotis Mavridis The i-Walk Lightweight Assistive Rollator: First Evaluation Study Journal Article Frontiers in Robotics and AI, 8, pp. 272, 2021, ISSN: 2296-9144. @article{10.3389/frobt.2021.677542, title = {The i-Walk Lightweight Assistive Rollator: First Evaluation Study}, author = {George Moustris and Nikolaos Kardaris and Antigoni Tsiami and Georgia Chalvatzaki and Petros Koutras and Athanasios Dometios and Paris Oikonomou and Costas Tzafestas and Petros Maragos and Eleni Efthimiou and Xanthi Papageorgiou and Stavroula-Evita Fotinea and Yiannis Koumpouros and Anna Vacalopoulou and Effie Papageorgiou and Alexandra Karavasili and Foteini Koureta and Dimitris Dimou and Alexandros Nikolakakis and Konstantinos Karaiskos and Panagiotis Mavridis}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/frobt-08-677542.pdf}, doi = {10.3389/frobt.2021.677542}, issn = {2296-9144}, year = {2021}, date = {2021-12-30}, journal = {Frontiers in Robotics and AI}, volume = {8}, pages = {272}, abstract = {Robots can play a significant role as assistive devices for people with movement impairment and mild cognitive deficit. In this paper we present an overview of the lightweight i-Walk intelligent robotic rollator, which offers cognitive and mobility assistance to the elderly and to people with light to moderate mobility impairment. The utility, usability, safety and technical performance of the device is investigated through a clinical study, which took place at a rehabilitation center in Greece involving real patients with mild to moderate cognitive and mobility impairment. This first evaluation study comprised a set of scenarios in a number of pre-defined use cases, including physical rehabilitation exercises, as well as mobility and ambulation involved in typical daily living activities of the patients. The design and implementation of this study is discussed in detail, along with the obtained results, which include both an objective and a subjective evaluation of the system operation, based on a set of technical performance measures and a validated questionnaire for the analysis of qualitative data, respectively. The study shows that the technical modules performed satisfactory under real conditions, and that the users generally hold very positive views of the platform, considering it safe and reliable.}, keywords = {}, pubstate = {published}, tppubtype = {article} }

Nikos Melanitis, Petros Maragos A linear method for camera pair self-calibration Journal Article Computer Vision and Image Understanding, 210, pp. 103223, 2021. @article{MeMa21, title = {A linear method for camera pair self-calibration}, author = {Nikos Melanitis and Petros Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2021_MelanitisMaragos_LinearCameraSelfPairCalibration_CVIU.pdf}, doi = {10.1016/j.cviu.2021.103223}, year = {2021}, date = {2021-09-01}, journal = {Computer Vision and Image Understanding}, volume = {210}, pages = {103223}, keywords = {}, pubstate = {published}, tppubtype = {article} }

N Efthymiou, P P Filntisis, G Potamianos, P Maragos Visual Robotic Perception System with Incremental Learning for Child–Robot Interaction Scenarios Journal Article Technologies, 9 (4), pp. 86, 2021. @article{efthymiou2021visual, title = {Visual Robotic Perception System with Incremental Learning for Child–Robot Interaction Scenarios}, author = {N Efthymiou and P P Filntisis and G Potamianos and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2021_EfthymiouEtAl_VisualRobotPerceptionSystem-ChildRobotInteract_Technologies-1.pdf}, doi = {10.3390/technologies9040086}, year = {2021}, date = {2021-01-01}, journal = {Technologies}, volume = {9}, number = {4}, pages = {86}, keywords = {}, pubstate = {published}, tppubtype = {article} }

M. Diomataris, N. Gkanatsios, V. Pitsikalis, P. Maragos Grounding Consistency: Distilling Spatial Common Sense for Precise Visual Relationship Detection Conference Proceedings of the International Conference on Computer Vision (ICCV-2021), 2021. @conference{Diomataris2021, title = {Grounding Consistency: Distilling Spatial Common Sense for Precise Visual Relationship Detection}, author = {M. Diomataris and N. Gkanatsios and V. Pitsikalis and P. Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/DiomatarisEtAl_GroundingConsistency-VisualRelationsDetection_ICCV2021.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/DiomatarisEtAl_GroundingConsistency-VisualRelationsDetection_ICCV2021_supp.pdf}, year = {2021}, date = {2021-12-31}, booktitle = {Proceedings of the International Conference on Computer Vision (ICCV-2021)}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

P Antoniadis, P P Filntisis, P Maragos Exploiting Emotional Dependencies with Graph Convolutional Networks for Facial Expression Recognition Conference Proc. 16th IEEE Int’l Conf. on Automatic Face and Gesture Recognition (FG-2021), 2021. @conference{Antoniadis2021, title = {Exploiting Emotional Dependencies with Graph Convolutional Networks for Facial Expression Recognition}, author = {P Antoniadis and P P Filntisis and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2021_AntoniadisEtAl_Emotion-GCN-FacialExpressionRecogn_FG-1.pdf}, year = {2021}, date = {2021-12-01}, booktitle = {Proc. 16th IEEE Int’l Conf. on Automatic Face and Gesture Recognition (FG-2021)}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

I Pikoulis, P P Filntisis, P Maragos Leveraging Semantic Scene Characteristics and Multi-Stream Convolutional Architectures in a Contextual Approach for Video-Based Visual Emotion Recognition in the Wild Conference Proc. 16th IEEE Int’l Conf. on Automatic Face and Gesture Recognition (FG-2021), 2021. @conference{Pikoulis2021, title = {Leveraging Semantic Scene Characteristics and Multi-Stream Convolutional Architectures in a Contextual Approach for Video-Based Visual Emotion Recognition in the Wild}, author = {I Pikoulis and P P Filntisis and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2021_PikoulisEtAl_VideoEmotionRecognInTheWild-SemanticMultiStreamContext_FG-1.pdf}, year = {2021}, date = {2021-12-01}, booktitle = {Proc. 16th IEEE Int’l Conf. on Automatic Face and Gesture Recognition (FG-2021)}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

V. Vasileiou, N. Kardaris, P. Maragos Exploring Temporal Context and Human Movement Dynamics for Online Action Detection in Videos Conference Proc. 29th European Signal Processing Conference (EUSIPCO 2021), Dublin, Ireland, 2021. @conference{Vasileiou2021, title = {Exploring Temporal Context and Human Movement Dynamics for Online Action Detection in Videos}, author = {V. Vasileiou and N. Kardaris and P. Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Vasileiou_EUSIPCO21_Enhancing_temporal_context_for_online_action_detection_in_videos_Paper.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Vasileiou_EUSIPCO21_presentation_slides.pdf}, year = {2021}, date = {2021-08-31}, booktitle = {Proc. 29th European Signal Processing Conference (EUSIPCO 2021)}, address = {Dublin, Ireland}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

P. P. Filntisis, N. Efthymiou, G. Potamianos, P. Maragos An Audiovisual Child Emotion Recognition System for Child-Robot Interaction Applications Conference Proc. 29th European Signal Processing Conference (EUSIPCO 2021), Dublin, Ireland, 2021. @conference{Filntisis2021, title = {An Audiovisual Child Emotion Recognition System for Child-Robot Interaction Applications}, author = {P. P. Filntisis and N. Efthymiou and G. Potamianos and P. Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Filntisis_EUSIPCO2021_ChildEmotionRecogn_presentation_slides.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/2021_FilntisisEtAl_AV-ChildEmotionRecognSystem-ChildRobotInteract_EUSIPCO.pdf}, year = {2021}, date = {2021-08-31}, booktitle = {Proc. 29th European Signal Processing Conference (EUSIPCO 2021)}, address = {Dublin, Ireland}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

C. Garoufis, A. Zlatintsi, P. Maragos HTMD-NET: A Hybrid Masking-Denoising Approach to Time-Domain Monaural Singing Voice Separation Conference Proc. 29th European Signal Processing Conference (EUSIPCO 2021), Dublin, Ireland, 2021. @conference{Garoufis2021, title = {HTMD-NET: A Hybrid Masking-Denoising Approach to Time-Domain Monaural Singing Voice Separation}, author = {C. Garoufis and A. Zlatintsi and P. Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Garoufis_EUSIPCO2021_HTMDNet_slides.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Garoufis_EUSIPCO2021_HTMDNet1_Paper.pdf}, year = {2021}, date = {2021-08-31}, booktitle = {Proc. 29th European Signal Processing Conference (EUSIPCO 2021)}, address = {Dublin, Ireland}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

K. Avramidis, A. Zlatintsi, C. Garoufis, P. Maragos Multiscale Fractal Analysis on EEG Signals for Music-Induced Emotion Recognition Conference Proc. 29th European Signal Processing Conference (EUSIPCO 2021), Dublin, Ireland, 2021. @conference{Avramidis2021, title = {Multiscale Fractal Analysis on EEG Signals for Music-Induced Emotion Recognition}, author = {K. Avramidis and A. Zlatintsi and C. Garoufis and P. Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Avramidis_EUSIPCO2021_MFA-EEG-MusicEmotion_presentation_slides.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/2021_AvramidisEtAl_MFA-EEG_MusicEmotionRecogn_EUSIPCO.pdf}, year = {2021}, date = {2021-08-31}, booktitle = {Proc. 29th European Signal Processing Conference (EUSIPCO 2021)}, address = {Dublin, Ireland}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

P. Giannoulis, G. Potamianos, P. Maragos Overlapped Sound Event Classification via Multi-Channel Sound Separation Network Conference Proc. 29th European Signal Processing Conference (EUSIPCO 2021), Dublin, Ireland, 2021. @conference{Giannoulis2021, title = {Overlapped Sound Event Classification via Multi-Channel Sound Separation Network}, author = {P. Giannoulis and G. Potamianos and P. Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Giannoulis_EUSIPCO21_OverlapSoundEventClassif_Paper.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Giannoulis_EUSIPCO21_presentation_slides.pdf}, year = {2021}, date = {2021-08-31}, booktitle = {Proc. 29th European Signal Processing Conference (EUSIPCO 2021)}, address = {Dublin, Ireland}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

C. Garoufis, A. Zlatintsi, P. P. Filntisis, N. Efthymiou, E. Kalisperakis, V. Garyfalli, T. Karantinos, L. Mantonakis, N. Smyrnis, P. Maragos An Unsupervised Learning Approach for Detecting Relapses from Spontaneous Speech in Patients with Psychosis Conference Proc. IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI-2021), 2021. @conference{Garoufis2021b, title = {An Unsupervised Learning Approach for Detecting Relapses from Spontaneous Speech in Patients with Psychosis}, author = {C. Garoufis and A. Zlatintsi and P. P. Filntisis and N. Efthymiou and E. Kalisperakis and V. Garyfalli and T. Karantinos and L. Mantonakis and N. Smyrnis and P. Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/Garoufis_BHI2021_UnsupervisedLearningRelapseDetection_Paper.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Garoufis_BHI21_Poster.pdf}, year = {2021}, date = {2021-07-31}, booktitle = {Proc. IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI-2021)}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

G Bastas, A Gkiokas, V Katsouros, P Maragos Convolutional Networks For Visual Onset Detection in the Context of Bowed String Instrument Performances Conference Proc. 18th Sound and Music Computing Conference (SMC 2021), 2021. @conference{Bastas2021, title = {Convolutional Networks For Visual Onset Detection in the Context of Bowed String Instrument Performances}, author = {G Bastas and A Gkiokas and V Katsouros and P Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2021_Bastas_ConvNetsVisualOnsetDetectBowedStringInstrument_SMC.pdf}, year = {2021}, date = {2021-06-29}, booktitle = {Proc. 18th Sound and Music Computing Conference (SMC 2021)}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

Dafni Anagnostopoulou, Niki Efthymiou, Christina Papailiou, Petros Maragos Engagement Estimation During Child Robot Interaction Using Deep Convolutional Networks Focusing on ASD Children Conference Proc. IEEE Int'l Conf. Robotics and Automation (ICRA-2021), Xi'an, 2021. @conference{AnagnostopoulouICRA2021, title = {Engagement Estimation During Child Robot Interaction Using Deep Convolutional Networks Focusing on ASD Children}, author = {Dafni Anagnostopoulou and Niki Efthymiou and Christina Papailiou and Petros Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2021_Anagnostopoulou_EngagementEstimationChildRobotInteraction_ICRA.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Anagnostopoulou_ICRA21_presentation1.mp4 http://robotics.ntua.gr/wp-content/uploads/sites/2/Anagnostopoulou_ICRA21_slides1.pdf}, year = {2021}, date = {2021-06-01}, booktitle = {Proc. IEEE Int'l Conf. Robotics and Automation (ICRA-2021)}, address = {Xi'an}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

Agelos Kratimenos, Georgios Pavlakos, Petros Maragos Independent Sign Language Recognition with 3D Body, Hands, and Face Reconstruction Conference Proc. 46th IEEE Int'l Conf. Acoustics, Speech and Signal Processing (ICASSP-2021), Toronto, 2021. @conference{Kratimenos_icassp21, title = {Independent Sign Language Recognition with 3D Body, Hands, and Face Reconstruction}, author = {Agelos Kratimenos and Georgios Pavlakos and Petros Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2021_KratimenosPavlakosMaragos_IsolatedSignLangRecogn3Dreconstruct_ICASSP.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Kratimenos_ICASSP2021_slides.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Kratimenos_ICASSP2021_video.mp4 http://robotics.ntua.gr/wp-content/uploads/sites/2/Kratimenos_ICASSP2021_poster.pdf}, year = {2021}, date = {2021-06-01}, booktitle = {Proc. 46th IEEE Int'l Conf. Acoustics, Speech and Signal Processing (ICASSP-2021)}, address = {Toronto}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

Nikos Tsilivis, Anastasios Tsiamis, Petros Maragos Sparsity in Max-Plus Algebra and Applications in Multivariate Convex Regression Conference Proc. 46th IEEE Int'l Conf. Acoustics, Speech and Signal Processing (ICASSP-2021), Toronto, 2021. @conference{TTM21, title = {Sparsity in Max-Plus Algebra and Applications in Multivariate Convex Regression}, author = {Nikos Tsilivis and Anastasios Tsiamis and Petros Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2021_TsilivisEtAl_SparseTropicalRegression_ICASSP.pdf}, year = {2021}, date = {2021-06-01}, booktitle = {Proc. 46th IEEE Int'l Conf. Acoustics, Speech and Signal Processing (ICASSP-2021)}, address = {Toronto}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

Nikolaos Dimitriadis, Petros Maragos Advances in Morphological Neural Networks: Training, Pruning and Enforcing Shape Constraints Conference Proc. 46th IEEE Int'l Conf. Acoustics, Speech and Signal Processing (ICASSP-2021), Toronto, 2021. @conference{DM21, title = {Advances in Morphological Neural Networks: Training, Pruning and Enforcing Shape Constraints}, author = {Nikolaos Dimitriadis and Petros Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2021_DimitriadisMaragos_AdvancesMorphologicNeuralNets_ICASSP.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/icassp2021-slides-Dimitriadis-Maragos.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/icassp2021-presentation-Dimitriadis-Maragos.mp4 http://robotics.ntua.gr/wp-content/uploads/sites/2/icassp2021-poster_Dimitriadis_Maragos.pdf}, year = {2021}, date = {2021-06-01}, booktitle = {Proc. 46th IEEE Int'l Conf. Acoustics, Speech and Signal Processing (ICASSP-2021)}, address = {Toronto}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

Kleanthis Avramidis, Agelos Kratimenos, Christos Garoufis, Athanasia Zlatintsi, Petros Maragos Deep Convolutional and Recurrent Networks for Polyphonic Instrument Classification from Monophonic Raw Audio Waveforms Conference Proc. 46th IEEE Int'l Conf. Acoustics, Speech and Signal Processing (ICASSP-2021), Toronto, 2021. @conference{AvramidisIC2021, title = {Deep Convolutional and Recurrent Networks for Polyphonic Instrument Classification from Monophonic Raw Audio Waveforms}, author = {Kleanthis Avramidis and Agelos Kratimenos and Christos Garoufis and Athanasia Zlatintsi and Petros Maragos}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2021_AvramidisKratimenos_PolyphonicInstrumentClassification_ICASSP.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Avramidis_ICASSP2021_IC2_Slides.pdf http://robotics.ntua.gr/wp-content/uploads/sites/2/Avramidis_ICASSP2021_IC2_Presentation.mp4 http://robotics.ntua.gr/wp-content/uploads/sites/2/Avramidis_ICASSP2021_IC2_Poster.pdf}, year = {2021}, date = {2021-06-01}, booktitle = {Proc. 46th IEEE Int'l Conf. Acoustics, Speech and Signal Processing (ICASSP-2021)}, address = {Toronto}, keywords = {}, pubstate = {published}, tppubtype = {conference} }

2020

K Kritsis, C Garoufis, A Zlatintsi, M Bouillon, C Acosta, D Martín-Albo, R Piechaud, P Maragos, V Katsouros iMuSciCA Workbench: Web-based Music Activities For Science Education Journal Article Journal of the Audio Engineering Society, 68 (10), pp. 738-746, 2020. @article{kritsis2020imuscia, title = {iMuSciCA Workbench: Web-based Music Activities For Science Education}, author = {K Kritsis and C Garoufis and A Zlatintsi and M Bouillon and C Acosta and D Martín-Albo and R Piechaud and P Maragos and V Katsouros}, url = {http://robotics.ntua.gr/wp-content/uploads/sites/2/2020_iMuSciCa-WebMusicActivitiesForScienceEducation_JAES.pdf}, doi = {10.17743/jaes.2020.0021}, year = {2020}, date = {2020-10-01}, journal = {Journal of the Audio Engineering Society}, volume = {68}, number = {10}, pages = {738-746}, keywords = {}, pubstate = {published}, tppubtype = {article} }

Christian Werner, Athanasios C Dometios, Costas S Tzafestas, Petros Maragos, Jürgen M Bauer, Klaus Hauer Evaluating the task effectiveness and user satisfaction with different operation modes of an assistive bathing robot in older adults Journal Article Assistive Technology, 0, 2020 (PMID: 32286163). @article{doi:10.1080/10400435.2020.1755744, title = {Evaluating the task effectiveness and user satisfaction with different operation modes of an assistive bathing robot in older adults}, author = {Christian Werner and Athanasios C Dometios and Costas S Tzafestas and Petros Maragos and Jürgen M Bauer and Klaus Hauer}, url = {https://doi.org/10.1080/10400435.2020.1755744 http://robotics.ntua.gr/wp-content/uploads/sites/2/Werner2020_EvaluatingTheTaskEffectivenessAndUserSatisfaction-AssistBathRobot_AssistTechnology.pdf}, doi = {10.1080/10400435.2020.1755744}, year = {2020}, date = {2020-07-08}, journal = {Assistive Technology}, volume = {0}, publisher = {Taylor & Francis}, note = {PMID: 32286163}, keywords = {}, pubstate = {published}, tppubtype = {article} }